New research from MIT examines fire from several new perspectives. The researchers use deep-learning approaches to extract the vibrational features of flickering flames and render them as sounds and materials.

The 19th-century physicist Michael Faraday was known not only for his seminal experimental contributions to electromagnetism but also for his public speaking. His annual Christmas lectures at the Royal Institution evolved into a holiday tradition that continues to this day. One of his most famous Christmas lectures concerned the chemical history of a candle. Faraday illustrated his points with a simple experiment: He placed a candle inside a lampglass in order to block out any breezes and achieve “a quiet flame.” Faraday then showed how the flame’s shape flickered and changed in response to perturbations.

“You must not imagine, because you see these tongues all at once, that the flame is of this particular shape,” Faraday observed. “A flame of that shape is never so at any one time. Never is a body of flame, like that which you just saw rising from the ball, of the shape it appears to you. It consists of a multitude of different shapes, succeeding each other so fast that the eye is only able to take cognizance of them all at once.”

Now, MIT researchers have brought Faraday’s simple experiment into the 21st century. Markus Buehler and his postdoc, Mario Milazzo, combined high-resolution imaging with deep learning to sonify a single candle flame. They then used that single flame as a basic building block, creating “music” out of its flickering dynamics and designing novel structures that could be 3D-printed into physical objects. Buehler described this and other related work at the American Physical Society meeting last week in Chicago.

As we’ve reported previously, Buehler specializes in developing AI models to design new proteins. He is perhaps best known for using sonification to illuminate structural details that might otherwise prove elusive. Buehler found that the hierarchical elements of music composition (pitch, range, dynamics, tempo) are analogous to the hierarchical elements of protein structure. Just as music combines a limited number of notes and chords into countless compositions, proteins draw on a limited number of building blocks (20 amino acids) that can combine in any number of ways to create novel protein structures with unique properties. Each amino acid has a particular sound signature, akin to a fingerprint.
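The analogy between amino acids and notes can be made concrete with a minimal Python sketch. It is not the mapping used in Buehler's work: the chromatic pitch assignment, the starting note, and the names `NOTE_FOR_RESIDUE`, `BASE_MIDI_NOTE`, and `sonify_sequence` are all assumptions chosen for illustration. It only shows how a fixed alphabet of 20 building blocks can be turned into a sequence of pitches.

```python
from typing import List

# One-letter codes of the 20 standard amino acids.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Hypothetical starting pitch (MIDI note 48, i.e. C3). This is an assumption,
# not a value taken from the research.
BASE_MIDI_NOTE = 48

# Illustrative mapping only: each amino acid gets the next semitone up.
NOTE_FOR_RESIDUE = {aa: BASE_MIDI_NOTE + i for i, aa in enumerate(AMINO_ACIDS)}


def midi_to_hz(note: int) -> float:
    """Convert a MIDI note number to a frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sonify_sequence(sequence: str) -> List[float]:
    """Return one frequency in Hz per residue of a protein sequence."""
    return [midi_to_hz(NOTE_FOR_RESIDUE[aa]) for aa in sequence.upper()]
```

Calling `sonify_sequence("GAGA")` returns four frequencies, one per residue, with the two glycines sharing one pitch and the two alanines sharing another. A real "sound signature" would carry far more information than a single pitch per residue.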

Several years ago, Buehler led a team of MIT scientists that mapped the molecular structure of proteins in spider silk threads onto music theory to produce the “sound” of silk. The hope was to establish a radical new way to create designer proteins. That work inspired a sonification art exhibit, “Spider’s Canvas,” in Paris in 2018. Artist Tomás Saraceno worked with MIT engineers to create an interactive harp-like instrument inspired by the web of a Cyrtophora citricola spider, with each strand in the “web” tuned to a different pitch. Combine those notes in various patterns in the web’s 3D structure, and you can generate melodies.

In 2019, Buehler’s team developed an even more advanced system of making music out of a protein structure—and then converting the music back to create novel proteins not seen in nature. The aim was to learn to create similar synthetic spiderwebs and other structures that mimic the spider’s process. And in 2020, Buehler’s team applied the same approach to model the vibrational properties of the spike protein responsible for the high contagion rate of the novel coronavirus (SARS-CoV-2).

Buehler pondered whether this approach could be expanded enough to study fire. “Flames, of course, are silent,” he said during a press conference. However, “Fire has all the elements of a vibrating string or vibrating molecule but in a dynamic pattern that’s interesting. If we could hear them, what would they sound like? Can we materialize fire? Can we push the envelope to generate bio-inspired materials that you could actually feel and touch from that?”

Like Faraday before them, Buehler and Milazzo started with a simple experiment involving a single candle flame. (A larger fire has so many perturbations that modeling it becomes too computationally difficult, but a single flame can be viewed as a basic building block of fire.) The researchers lit a candle in a controlled environment, with no air movement or any other external signals, recreating Faraday’s quiet flame. Then they played sounds from a speaker and used a high-speed camera to capture how the flame flickered and deformed over time in response to those acoustic signals.

Figure: Assembly simulation of flames into a princess in a fairytale garden. Credit: Markus Buehler and Mario Milazzo, MIT.

“There are characteristic shapes that are created by this, but they are not the same shapes every time,” Buehler said. “This is a dynamical process, so what you see [in our images] is just a snapshot of these. In reality, there are thousands and thousands of images for each excitation of the acoustic signal: a circle of fire.”

He and Milazzo next trained a neural network to classify the original audio signals that created a given flame shape. The researchers effectively sonified the vibrational frequencies of fire. The more violently a flame deflects, the more dramatically the audio signal changes. The flame becomes a kind of musical instrument, one that can be “played” by exposing it to air currents, for example, so that it flickers in particular ways: a form of musical composition.
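The relationship between deflection and sound can be illustrated with a short Python sketch. It does not reproduce the researchers' neural network. It swaps in a hand-written linear rule, under which a larger flame deflection produces a larger pitch shift, purely to show the idea. The constants `BASE_HZ` and `HZ_PER_PIXEL`, the pixel units, and the function names are all assumptions.

```python
import math
from typing import List, Sequence

BASE_HZ = 220.0       # hypothetical pitch of an undisturbed ("quiet") flame
HZ_PER_PIXEL = 20.0   # hypothetical gain: pitch shift per pixel of deflection


def deflection_to_hz(deflection_px: float) -> float:
    """Map a flame-tip deflection to a pitch; bigger deflection, bigger shift."""
    return BASE_HZ + HZ_PER_PIXEL * abs(deflection_px)


def synthesize(
    deflections_px: Sequence[float],
    frame_seconds: float = 0.05,
    sample_rate: int = 8000,
) -> List[float]:
    """Turn one deflection per video frame into sine-wave audio samples."""
    samples_per_frame = round(frame_seconds * sample_rate)
    samples: List[float] = []
    phase = 0.0  # carried across frames so the waveform has no jumps
    for deflection in deflections_px:
        step = 2 * math.pi * deflection_to_hz(deflection) / sample_rate
        for _ in range(samples_per_frame):
            samples.append(math.sin(phase))
            phase += step
    return samples
```

For example, `synthesize([0.0, 1.5, -4.0])` yields three short tones of rising pitch, since the rule uses only the magnitude of each deflection. Writing the samples to a WAV file or feeding them to an audio library is left out to keep the sketch self-contained.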

“Fire is vibrational, rhythmic and repetitive, and continuously changing, and this is what defines music,” said Buehler. “Deep learning helps us to mine the data and particular patterns of fire, and with different patterns in fire, you can create this orchestra of different sounds.”

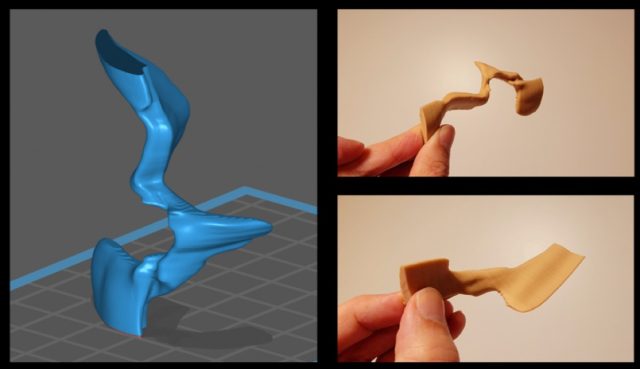

Buehler and Milazzo have also used the various shapes of flickering flames as building blocks to design novel structures on the computer and then 3D-print those structures. “It’s a bit like freezing a fire’s flame in time and being able to look at it from different angles,” said Buehler. “You can touch it, rotate it, and the other thing you can do is look inside the flames, which is something that no human has ever seen.”