The world of magic had Houdini, who pioneered tricks that are still performed today. And data compression has Jacob Ziv.

In 1977, Ziv, working with Abraham Lempel, published the equivalent of Houdini on Magic: a paper in the IEEE Transactions on Information Theory titled “A Universal Algorithm for Sequential Data Compression.” The algorithm described in the paper came to be called LZ77, from the authors’ names, in alphabetical order, and the year. LZ77 wasn’t the first lossless compression algorithm, but it was the first that could work its magic in a single step.

The following year, the two researchers issued a refinement, LZ78. That algorithm became the basis for the Unix compress program used in the early ’80s; WinZip and Gzip, born in the early ’90s; and the GIF and TIFF image formats. Without these algorithms, we’d likely be mailing large data files on discs instead of sending them across the Internet with a click, buying our music on CDs instead of streaming it, and looking at Facebook feeds that don’t have bouncing animated images.

Ziv went on to partner with other researchers on other innovations in compression. It is his full body of work, spanning more than half a century, that earned him the 2021 IEEE Medal of Honor “for fundamental contributions to information theory and data compression technology, and for distinguished research leadership.”

Ziv was born in 1931 to Russian immigrants in Tiberias, a city then in British-ruled Palestine and now part of Israel. Electricity and gadgets—and little else—fascinated him as a child. While practicing violin, for example, he came up with a scheme to turn his music stand into a lamp. He also tried to build a Marconi transmitter from metal player-piano parts. When he plugged the contraption in, the entire house went dark. He never did get that transmitter to work.

When the Arab-Israeli War began in 1948, Ziv was in high school. Drafted into the Israel Defense Forces, he served briefly on the front lines until a group of mothers held organized protests, demanding that the youngest soldiers be sent elsewhere. Ziv’s reassignment took him to the Israeli Air Force, where he trained as a radar technician. When the war ended, he entered Technion—Israel Institute of Technology to study electrical engineering.

After completing his master’s degree in 1955, Ziv returned to the defense world, this time joining Israel’s National Defense Research Laboratory (now Rafael Advanced Defense Systems) to develop electronic components for use in missiles and other military systems. The trouble was, Ziv recalls, that none of the engineers in the group, including himself, had more than a basic understanding of electronics. Their electrical engineering education had focused more on power systems.

“We had about six people, and we had to teach ourselves,” he says. “We would pick a book and then study together, like religious Jews studying the Hebrew Bible. It wasn’t enough.”

The group’s goal was to build a telemetry system using transistors instead of vacuum tubes. They needed not only knowledge, but parts. Ziv contacted Bell Telephone Laboratories and requested a free sample of its transistor; the company sent 100.

“That covered our needs for a few months,” he says. “I give myself credit for being the first one in Israel to do something serious with the transistor.”

In 1959, Ziv was selected as one of a handful of researchers from Israel’s defense lab to study abroad. That program, he says, transformed the evolution of science in Israel. Its organizers didn’t steer the selected young engineers and scientists into particular fields. Instead, they let them pursue any type of graduate studies in any Western nation.

Ziv planned to continue working in communications, but he was no longer interested in just the hardware. He had recently read Information Theory (Prentice-Hall, 1953), one of the earliest books on the subject, by Stanford Goldman, and he decided to make information theory his focus. And where else would one study information theory but MIT, where Claude Shannon, the field’s pioneer, had started out?

Ziv arrived in Cambridge, Mass., in 1960. His Ph.D. research involved a method of determining how to encode and decode messages sent through a noisy channel, minimizing the probability of error while at the same time keeping the decoding simple.

“Information theory is beautiful,” he says. “It tells you what is the best that you can ever achieve, and [it] tells you how to approximate the outcome. So if you invest the computational effort, you can know you are approaching the best outcome possible.”

Ziv contrasts that certainty with the uncertainty of a deep-learning algorithm. It may be clear that the algorithm is working, but nobody really knows whether it is the best result possible.

While at MIT, Ziv held a part-time job at U.S. defense contractor Melpar, where he worked on error-correcting software. He found this work less beautiful. “In order to run a computer program at the time, you had to use punch cards,” he recalls. “And I hated them. That is why I didn’t go into real computer science.”

Back at the Defense Research Laboratory after two years in the United States, Ziv took charge of the Communications Department. Then in 1970, with several other coworkers, he joined the faculty of Technion.

There he met Abraham Lempel. The two discussed trying to improve lossless data compression.

The state of the art in lossless data compression at the time was Huffman coding. This approach starts by finding sequences of bits in a data file and then sorting them by the frequency with which they appear. Then the encoder builds a dictionary in which the most common sequences are represented by the smallest number of bits. This is the same idea behind Morse code: The most frequent letter in the English language, e, is represented by a single dot, while rarer letters have more complex combinations of dots and dashes.

Huffman coding, while still used today in the MPEG-2 compression format and a lossless form of JPEG, has its drawbacks. It requires two passes through a data file: one to calculate the statistical features of the file, and the second to encode the data. And storing the dictionary along with the encoded data adds to the size of the compressed file.
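
The two passes described above can be sketched in a few lines of Python (a language chosen here for illustration; the article names none). This is a minimal sketch, not a production encoder: the function names `huffman_code` and `encode` are hypothetical, and the input is assumed to be a short string of symbols rather than raw bits.

```python
import heapq
from collections import Counter

def huffman_code(data):
    """Build a symbol -> bit-string table (illustrative sketch)."""
    freq = Counter(data)  # pass 1: count how often each symbol appears
    if not freq:
        return {}
    # Heap entries are (weight, tiebreak, partial code table).
    # The unique integer tiebreak keeps the dicts from ever being compared.
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A single distinct symbol still needs a one-bit code.
        return {sym: "0" for sym in heap[0][2]}
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees, prefixing their codes.
        w1, _, t1 = heapq.heappop(heap)
        w2, _, t2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in t1.items()}
        merged.update({s: "1" + c for s, c in t2.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

def encode(data, table):
    """Pass 2: replace each symbol with its code word."""
    return "".join(table[s] for s in data)

table = huffman_code("aaaabbc")
bits = encode("aaaabbc", table)
```

In this example the most frequent symbol, `a`, receives a one-bit code and the rarer symbols receive two bits each. The table itself would also have to be stored with the output, which is the overhead the article mentions.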

Ziv and Lempel wondered if they could develop a lossless data-compression algorithm that would work on any kind of data, did not require preprocessing, and would achieve the best compression for that data, a target defined by something known as the Shannon entropy. It was unclear if their goal was even possible. They decided to find out.
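
For reference, the Shannon entropy mentioned above can be estimated from symbol frequencies. The Python sketch below (Python is an illustrative choice; the article names no language) computes a zeroth-order empirical entropy in bits per symbol. The function name `empirical_entropy` is hypothetical, and treating symbols as independent is a simplifying assumption: the limit Ziv and Lempel targeted also accounts for dependence between symbols.

```python
import math
from collections import Counter

def empirical_entropy(data):
    """Zeroth-order entropy estimate in bits per symbol (illustrative)."""
    n = len(data)
    counts = Counter(data)
    # H = -sum(p * log2(p)) over the observed symbol frequencies p = c / n
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

balanced = empirical_entropy("0101")  # two equally likely symbols
constant = empirical_entropy("aaaa")  # one symbol, nothing to learn
```

Under this estimate, a sequence with two equally frequent symbols needs about one bit per symbol, while a constant sequence needs essentially none.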

Ziv says he and Lempel were the “perfect match” to tackle this question. “I knew all about information theory and statistics, and Abraham was well equipped in Boolean algebra and computer science.”

The two came up with the idea of having the algorithm look for unique sequences of bits at the same time that it’s compressing the data, using pointers to refer to previously seen sequences. This approach requires only one pass through the file, so it’s faster than Huffman coding.

Ziv explains it this way: “You look at incoming bits to find the longest stretch of bits for which there is a match in the past. Let’s say that first incoming bit is a 1. Now, since you have only one bit, you have never seen it in the past, so you have no choice but to transmit it as is.”

“But then you get another bit,” he continues. “Say that’s a 1 as well. So you enter into your dictionary 1-1. Say the next bit is a 0. So in your dictionary you now have 1-1 and also 1-0.”

Here’s where the pointer comes in. The next time that the stream of bits includes a 1-1 or a 1-0, the software doesn’t transmit those bits. Instead it sends a pointer to the location where that sequence first appeared, along with the length of the matched sequence. The number of bits that you need for that pointer is very small.
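
The pointer idea can be sketched as follows, in Python (an illustrative choice of language). This follows a common textbook presentation of LZ77, in which each output token is a triple of distance back, match length, and next literal symbol; it is an assumption here, not a transcription of the 1977 paper. The name `lz77_encode`, the `window` parameter, and the brute-force search are all hypothetical simplifications.

```python
def lz77_encode(data, window=255):
    """Encode a string as (offset, length, next_symbol) triples (sketch)."""
    i, out = 0, []
    while i < len(data):
        best_len, best_off = 0, 0
        # Search earlier positions inside the window for the longest match.
        for j in range(max(0, i - window), i):
            length = 0
            # Stop one symbol early so a literal is always left to send.
            while (i + length < len(data) - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_len, best_off = length, i - j
        # Pointer (distance back, match length) plus the next literal symbol.
        out.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return out

tokens = lz77_encode("abcabcabcd")
```

Here the first three symbols go out as literals, and the remaining seven are covered by a single token that points three positions back. A match is allowed to run past the current position, which is how a short history can describe a long repetition.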

“It’s basically what they used to do in publishing TV Guide,” Ziv says. “They would run a synopsis of each program once. If the program appeared more than once, they didn’t republish the synopsis. They just said, go back to page x.”

Decoding in this way is even simpler, because the decoder doesn’t have to identify unique sequences. Instead it finds the locations of the sequences by following the pointers and then replaces each pointer with a copy of the relevant sequence.
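
A decoder along these lines is indeed short. The Python sketch below assumes the input is a list of (offset, length, next symbol) triples, a common textbook token format for LZ77 rather than anything specified in the article; the name `lz77_decode` and the sample tokens are hypothetical.

```python
def lz77_decode(tokens):
    """Rebuild the text from (offset, length, next_symbol) triples (sketch)."""
    out = []
    for offset, length, literal in tokens:
        start = len(out) - offset  # where the referenced sequence begins
        for k in range(length):
            # Copy one symbol at a time, so a copy may overlap
            # the text that is still being written.
            out.append(out[start + k])
        out.append(literal)
    return "".join(out)

# Three literals, then "go back 3 and copy 6", then a final literal.
text = lz77_decode([(0, 0, "a"), (0, 0, "b"), (0, 0, "c"), (3, 6, "d")])
```

The decoder never searches for anything: it only follows each pointer and copies, which is why decoding is cheaper than encoding.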

The algorithm did everything Ziv and Lempel had set out to do—it proved that universally optimum lossless compression without preprocessing was possible.

“At the time they published their work, the fact that the algorithm was crisp and elegant and was easily implementable with low computational complexity was almost beside the point,” says Tsachy Weissman, an electrical engineering professor at Stanford University who specializes in information theory. “It was more about the theoretical result.”

Eventually, though, researchers recognized the algorithm’s practical implications, Weissman says. “The algorithm itself became really useful when our technologies started dealing with larger file sizes beyond 100,000 or even a million characters.”

“Their story is a story about the power of fundamental theoretical research,” Weissman adds. “You can establish theoretical results about what should be achievable—and decades later humanity benefits from the implementation of algorithms based on those results.”

Ziv and Lempel kept working on the technology, trying to get closer to entropy for small data files. That work led to LZ78. Ziv says LZ78 seems similar to LZ77 but is actually very different, because it anticipates the next bit. “Let’s say the first bit is a 1, so you enter in the dictionary two codes, 1-1 and 1-0,” he explains. “You can imagine these two sequences as the first branches of a tree.”

“When the second bit comes,” Ziv says, “if it’s a 1, you send the pointer to the first code, the 1-1, and if it’s 0, you point to the other code, 1-0. And then you extend the dictionary by adding two more possibilities to the selected branch of the tree. As you do that repeatedly, sequences that appear more frequently will grow longer branches.”

“It turns out,” he says, “that not only was that the optimal [approach], but so simple that it became useful right away.”
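
The growing tree of phrases can be sketched in Python (an illustrative choice of language). Note that this uses the usual textbook formulation of LZ78, in which each token is a pair of dictionary index and next symbol and the dictionary gains one phrase per token; it differs in detail from the two-branch description Ziv gives above. The names `lz78_encode` and `lz78_decode` are hypothetical.

```python
def lz78_encode(data):
    """Encode a string as (phrase_index, next_symbol) pairs (sketch)."""
    dictionary = {"": 0}  # index 0 is the empty phrase, the root of the tree
    out, phrase = [], ""
    for sym in data:
        if phrase + sym in dictionary:
            phrase += sym  # keep walking down an existing branch
        else:
            out.append((dictionary[phrase], sym))
            dictionary[phrase + sym] = len(dictionary)  # grow a new branch
            phrase = ""
    if phrase:
        # Input ended partway along a known branch; emit it with no symbol.
        out.append((dictionary[phrase], ""))
    return out

def lz78_decode(tokens):
    """Rebuild the text by replaying the same dictionary growth."""
    phrases, out = [""], []
    for index, sym in tokens:
        phrase = phrases[index] + sym
        phrases.append(phrase)
        out.append(phrase)
    return "".join(out)

tokens = lz78_encode("abababa")
restored = lz78_decode(tokens)
```

In this example the phrases grow from `a` and `b` to `ab` and then `aba`, so frequently repeated sequences end up on longer branches, as Ziv describes.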

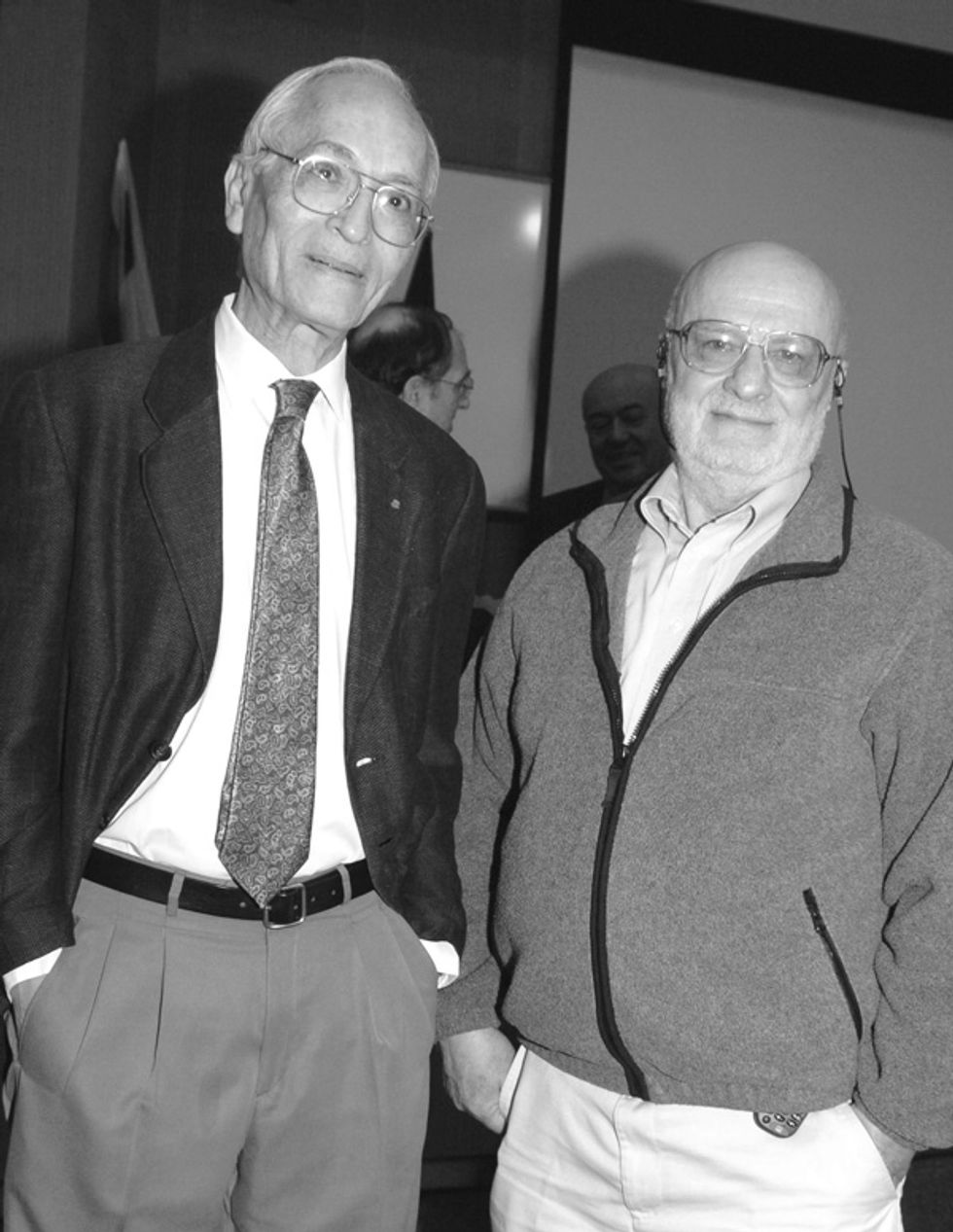

Jacob Ziv (left) and Abraham Lempel published algorithms for lossless data compression in 1977 and 1978, both in the IEEE Transactions on Information Theory. The methods became known as LZ77 and LZ78 and are still in use today.

Photo: Jacob Ziv/Technion

While Ziv and Lempel were working on LZ78, they were both on sabbatical from Technion and working at U.S. companies. They knew their development would be commercially useful, and they wanted to patent it.

“I was at Bell Labs,” Ziv recalls, “and so I thought the patent should belong to them. But they said that it’s not possible to get a patent unless it’s a piece of hardware, and they were not interested in trying.” (The U.S. Supreme Court didn’t open the door to direct patent protection for software until the 1980s.)

However, Lempel’s employer, Sperry Rand Corp., was willing to try. It got around the restriction on software patents by building hardware that implemented the algorithm and patenting that device. Sperry Rand followed that first patent with a version adapted by researcher Terry Welch, called the LZW algorithm. It was the LZW variant that spread most widely.

Ziv regrets not being able to patent LZ78 directly, but, he says, “We enjoyed the fact that [LZW] was very popular. It made us famous, and we also enjoyed the research it led us to.”

One concept that followed came to be called Lempel-Ziv complexity, a measure of the number of unique substrings contained in a sequence of bits. The fewer unique substrings, the more a sequence can be compressed.

This measure later came to be used to check the security of encryption codes; if a code is truly random, it cannot be compressed. Lempel-Ziv complexity has also been used to analyze electroencephalograms (recordings of electrical activity in the brain) to determine the depth of anesthesia, to diagnose depression, and for other purposes. Researchers have even applied it to analyze pop lyrics, to determine trends in repetitiveness.
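
One common way to compute Lempel-Ziv complexity is to count the phrases produced when a sequence is parsed left to right, with each phrase being the shortest stretch that has not already appeared earlier in the sequence. The Python sketch below assumes that formulation; the function name `lz_complexity` is hypothetical, and the quadratic-time search is chosen for clarity rather than speed.

```python
def lz_complexity(s):
    """Count the phrases in a left-to-right Lempel-Ziv parsing (sketch)."""
    i, count, n = 0, 0, len(s)
    while i < n:
        length = 1
        # Extend the candidate phrase while it can still be copied from
        # text that starts before position i (overlap is allowed).
        while i + length <= n and s[i:i + length] in s[:i + length - 1]:
            length += 1
        count += 1   # one new phrase ends here
        i += length
    return count

structured = lz_complexity("0001101001000101")  # parses into 6 phrases
repetitive = lz_complexity("0" * 16)            # parses into 2 phrases
```

The first string parses as 0, 001, 10, 100, 1000, 101, while the all-zeros string needs only two phrases, matching the intuition that fewer new substrings means more compressible data.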

Over his career, Ziv published some 100 peer-reviewed papers. While the 1977 and 1978 papers are the most famous, information theorists who came after Ziv have their own favorites.

For Shlomo Shamai, a distinguished professor at Technion, it’s the 1976 paper that introduced the Wyner-Ziv algorithm, a way of characterizing the limits of using supplementary information available to the decoder but not the encoder. That problem emerges, for example, in video applications that take advantage of the fact that the decoder has already deciphered the previous frame and thus it can be used as side information for encoding the next one.

For Vincent Poor, a professor of electrical engineering at Princeton University, it’s the 1969 paper describing the Ziv-Zakai bound, a way of knowing whether or not a signal processor is getting the most accurate information possible from a given signal.

Ziv also inspired a number of leading data-compression experts through the classes he taught at Technion until 1985. Weissman, a former student, says Ziv “is deeply passionate about the mathematical beauty of compression as a way to quantify information. Taking a course from him in 1999 had a big part in setting me on the path of my own research.”

He wasn’t the only one so inspired. “I took a class on information theory from Ziv in 1979, at the beginning of my master’s studies,” says Shamai. “More than 40 years have passed, and I still remember the course. It made me eager to look at these problems, to do research, and to pursue a Ph.D.”

In recent years, glaucoma has taken away most of Ziv’s vision. He says that a paper published in IEEE Transactions on Information Theory this January is his last. He is 89.

“I started the paper two and a half years ago, when I still had enough vision to use a computer,” he says. “At the end, Yuval Cassuto, a younger faculty member at Technion, finished the project.” The paper discusses situations in which large information files need to be transmitted quickly to remote databases.

As Ziv explains it, such a need may arise when a doctor wants to compare a patient’s DNA sample to past samples from the same patient, to determine if there has been a mutation, or to a library of DNA, to determine if the patient has a genetic disease. Or a researcher studying a new virus may want to compare its DNA sequence to a DNA database of known viruses.

“The problem is that the amount of information in a DNA sample is huge,” Ziv says, “too much to be sent by a network today in a matter of hours or even, sometimes, in days. If you are, say, trying to identify viruses that are changing very quickly in time, that may be too long.”

The approach he and Cassuto describe involves using known sequences that appear commonly in the database to help compress the new data, without first checking for a specific match between the new data and the known sequences.

“I really hope that this research might be used in the future,” Ziv says. If his track record is any indication, Cassuto-Ziv—or perhaps CZ21—will add to his legacy.

This article appears in the May 2021 print issue as “Conjurer of Compression.”