Measuring How Impactful Your Training Is

Organizations invest significant resources and effort in building training solutions for their employees and customers. If you are involved in planning, sourcing, or building training content, you have probably wondered how to measure the impact of your training. Measurement falls broadly into two categories, the same division most social science research uses:

1. Qualitative Analysis

This captures nonnumerical changes, such as learners’ experience and observed behavior, and answers questions like:

- Did it achieve its stated objectives?

- Did people find it helpful?

- Did it move the needle in terms of performance, knowledge, or attitudinal goals?

- How did people use the discussion forum?

2. Quantitative Analysis

This captures numerical changes, such as an increase in sales, and answers questions like:

- How many people took the course?

- How long did they spend on it?

- How many made it to the end?

- Which videos were played most?

- Which pages were skipped?

- Did people use the discussion forum?
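Several of the quantitative questions above come down to simple arithmetic over course-activity records. The sketch below assumes a hypothetical export of per-learner records (the `Enrollment` fields are illustrative, not any particular LMS’s format) and computes enrollment, average time spent, completion rate, and forum participation.

```python
from dataclasses import dataclass


@dataclass
class Enrollment:
    # Hypothetical per-learner record; a real LMS export will look different.
    learner_id: str
    minutes_spent: float
    completed: bool
    forum_posts: int


def summarize(records: list[Enrollment]) -> dict[str, float]:
    """Answer a few of the quantitative questions from raw enrollment records."""
    total = len(records)
    if total == 0:
        raise ValueError("no enrollment records to summarize")
    completed = sum(1 for r in records if r.completed)
    posters = sum(1 for r in records if r.forum_posts > 0)
    return {
        # How many people took the course?
        "learners": total,
        # How long did they spend on it?
        "avg_minutes": sum(r.minutes_spent for r in records) / total,
        # How many made it to the end?
        "completion_rate": completed / total,
        # Did people use the discussion forum?
        "forum_participation": posters / total,
    }


records = [
    Enrollment("a", 42.0, True, 3),
    Enrollment("b", 15.0, False, 0),
    Enrollment("c", 38.0, True, 1),
    Enrollment("d", 5.0, False, 0),
]
print(summarize(records))
```

Questions such as which videos were played most or which pages were skipped follow the same pattern: count events per item instead of per learner.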

As winners of multiple awards for learner impact and learning transfer, we tackle this analysis for our clients regularly. Here are a few pointers to get you started on measuring the effectiveness of learning interventions in your organization.

1. What To Measure?

You want to compare the outcomes of the course with the learning objectives. This is a simple statement, but one we must keep front and center. Was the primary purpose of your in-person training to feed the attendees? If not, why should the survey ask how the food was? Similarly, if the health and safety e-course you rolled out on your LMS last month was about increasing awareness of company protocols, then you should focus on measuring the change in awareness.

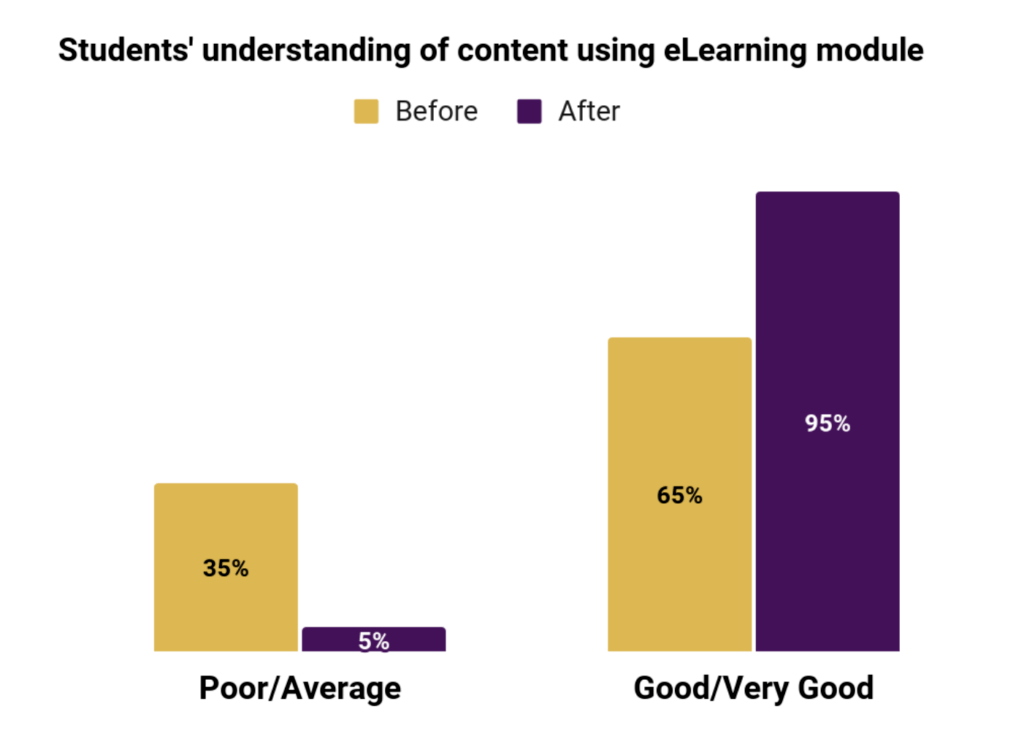

Measuring change requires, by definition, a comparison of two readings: a baseline taken before the training and a final reading taken after it. This is quite feasible when you completely overhaul an existing training course, because you can take the baseline before the new version launches. For example, the data above from one of our clients shows learners’ confidence in their skills before and after the training.
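The before-and-after comparison is simple arithmetic. As a minimal sketch, assume each learner rated their confidence on a hypothetical 1–5 scale in both a pre-training and a post-training survey. The example compares only learners who answered both surveys, then reports the mean rating on each occasion and the average per-learner change. The ratings are made-up illustration data, not the client results shown in the image.

```python
from statistics import mean

# Hypothetical 1-5 confidence ratings keyed by learner ID (illustration only).
before = {"a": 2, "b": 3, "c": 2, "d": 4}
after = {"a": 4, "b": 4, "c": 3, "d": 4}


def pre_post_change(before: dict[str, int], after: dict[str, int]) -> dict[str, float]:
    """Compare baseline and follow-up ratings for learners who answered both surveys."""
    paired = sorted(before.keys() & after.keys())  # only learners with both readings
    if not paired:
        raise ValueError("no learners answered both surveys")
    pre = [before[k] for k in paired]
    post = [after[k] for k in paired]
    return {
        "n": len(paired),
        "mean_before": mean(pre),
        "mean_after": mean(post),
        # Average of each learner's own change (after minus before).
        "mean_change": mean(b - a for a, b in zip(pre, post)),
    }


print(pre_post_change(before, after))
```

Pairing responses by learner keeps the comparison fair when some people skip one of the surveys. A positive change shows movement, not proof that the training alone caused it.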

Another point to keep in mind is that measuring the effectiveness of training is inherently different from assessing your learners in a course, so make sure your yardstick measures the training, not the trainees. A large sample size helps limit the influence of outliers, and careful phrasing of questions makes a big difference too. Consider the difference between “Who should you report a workplace harassment incident to?” and “Did the course help you understand the reporting protocols of the hospital?” The first tests the learner; the second evaluates the training.

2. When To Measure?

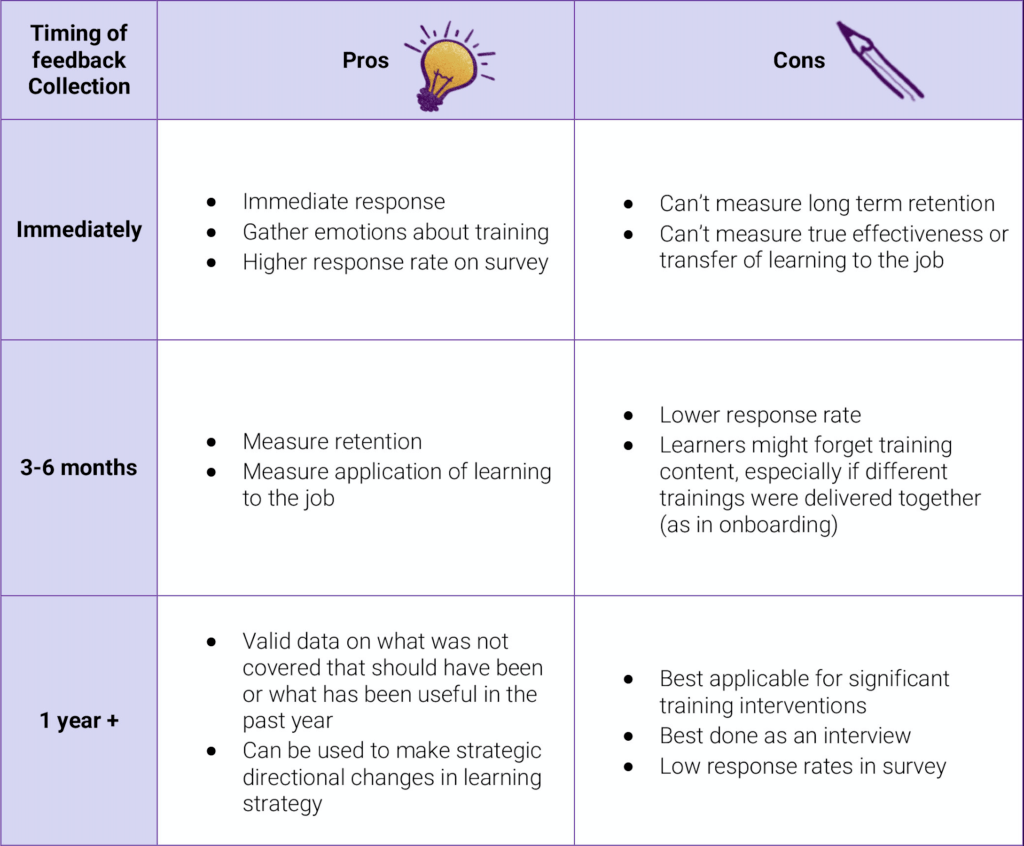

The answer depends on the type of training, the resources available, and the purpose of the training. In most cases, a survey within the first week after course completion is a good idea. It captures granular details while the training is still fresh in learners’ minds.

Typically, you also want your learners to retain the knowledge, skills, and attitudes you worked so hard to teach them. In that case, a short survey at the 3–6 month mark and another at the 1-year mark make sense. Make sure your questions reflect the time that has passed since the training. These long-gap surveys can yield valuable insights into which course content actually proved useful. Consider questions such as “What content helped you in your job in the last year?” and “What else do you wish we had covered?”

One more word of advice: expect a much lower response rate. People are rarely eager to give feedback on training they took months ago. Factor this into the way you administer your measurement.
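The timing suggested above (a survey within the first week, then follow-ups in the 3–6 month window and at the 1-year mark) can be turned into a per-learner schedule. This sketch assumes you know each learner’s completion date. The `SURVEY_OFFSETS` values of 7, 120, and 365 days are one hypothetical reading of those windows, not fixed rules, and `response_rate` is an illustrative helper for tracking the drop-off in responses.

```python
from datetime import date, timedelta

# Hypothetical offsets in days after course completion; adjust to your own plan.
SURVEY_OFFSETS = {
    "immediate": 7,    # within the first week
    "retention": 120,  # somewhere in the 3-6 month window
    "one_year": 365,   # the 1-year mark
}


def survey_schedule(completed_on: date) -> dict[str, date]:
    """Return the date each survey should go out for one learner."""
    return {
        name: completed_on + timedelta(days=days)
        for name, days in SURVEY_OFFSETS.items()
    }


def response_rate(responses: int, invited: int) -> float:
    """Share of invited learners who answered; returns 0.0 if nobody was invited."""
    return responses / invited if invited else 0.0


print(survey_schedule(date(2024, 1, 15)))
```

Tracking `response_rate` separately for each survey makes the expected drop-off in later follow-ups visible, so a low one-year response count is not mistaken for a problem with the training itself.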

3. How To Measure?

Standard surveys, known in the industry as smile sheets, have a bad reputation, and for good reason. Facilitators have used them to confirm the results they were hoping for, and their questions are often not valid measures of the training’s success. However, asynchronous, stand-alone surveys remain the least painful way to collect and make sense of large volumes of participant feedback. If you have a large learner base, they are sometimes the only practical way to gather feedback.

Creating effective survey questions is a science, with many academic papers and books devoted to the topic. One quick tip: avoid leading questions such as “How has your knowledge of conflict-handling procedures improved as a result of this training?”, which assumes that knowledge has in fact increased.

The survey can be sent manually within a week of the trigger event (usually course completion) or automatically at completion, for example as a link that becomes available in your LMS at the right moment.

Alternatively, or additionally, you can measure training through learner or manager interviews, or by tracking actual changes in behavior and trends in operational metrics such as escalated calls, faulty widgets, or employee complaints. Where such data is available, it is an excellent way to link your training intervention directly to its impact on the organization, and it is worth pursuing.

So, how are you measuring training in your organization? How do you know whether your training dollars are actually moving the needle and helping the business? Let us know. We’d love to hear from you!

Artha Learning Inc

Artha is a full-service learning design firm. We partner with organizations to design their digital learning initiatives from an instructional, engagement, and technical point of view.

Originally published at arthalearning.com.